The Believing Brain (book by Michael Shermer)

The Believing Brain: From Ghosts and Gods to Politics and Conspiracies: How We Construct Beliefs and Reinforce Them as Truths (2011), by Michael Shermer, founding publisher of Skeptic magazine and executive director of the Skeptics Society in America
http://www.michaelshermer.com/the-believing-brain

The Believing Brain: Why Science Is the Only Way Out of Belief-Dependent Realism
By Michael Shermer | July 5, 2011
http://www.scientificamerican.com/article.cfm?id=the-believing-brain

Was President Barack Obama born in Hawaii? I find the question so absurd, not to mention possibly racist in its motivation, that when I am confronted with “birthers” who believe otherwise, I find it difficult to even focus on their arguments about the difference between a birth certificate and a certificate of live birth. The reason is that once I formed an opinion on the subject, it became a belief, subject to a host of cognitive biases to ensure its verisimilitude. Am I being irrational? Possibly.

In fact, this is how most belief systems work for most of us most of the time. We form our beliefs for a variety of subjective, emotional and psychological reasons in the context of environments created by family, friends, colleagues, culture and society at large. After forming our beliefs, we then defend, justify and rationalize them with a host of intellectual reasons, cogent arguments and rational explanations. Beliefs come first; explanations for beliefs follow.

In my new book The Believing Brain (Holt, 2011), I call this process, wherein our perceptions about reality are dependent on the beliefs that we hold about it, belief-dependent realism. Reality exists independent of human minds, but our understanding of it depends on the beliefs we hold at any given time. I patterned belief-dependent realism after model-dependent realism, presented by physicists Stephen Hawking and Leonard Mlodinow in their book The Grand Design (Bantam Books, 2010). There they argue that because no one model is adequate to explain reality, “one cannot be said to be more real than the other.” When these models are coupled to theories, they form entire worldviews.

Once we form beliefs and make commitments to them, we maintain and reinforce them through a number of powerful cognitive biases that distort our percepts to fit belief concepts. Among them are:

- Anchoring bias: relying too heavily on one reference anchor or piece of information when making decisions.
- Authority bias: valuing the opinions of an authority, especially in the evaluation of something we know little about.
- Belief bias: evaluating the strength of an argument based on the believability of its conclusion.
- Confirmation bias: seeking and finding confirming evidence in support of already existing beliefs and ignoring or reinterpreting disconfirming evidence.

On top of all these biases, there is the in-group bias, in which we place more value on the beliefs of those whom we perceive to be fellow members of our group and less on the beliefs of those from different groups. This is a result of our evolved tribal brains leading us not only to place such value judgment on beliefs but also to demonize and dismiss them as nonsense or evil, or both.

Belief-dependent realism is driven even deeper by a meta-bias called the bias blind spot, or the tendency to recognize the power of cognitive biases in other people but to be blind to their influence on our own beliefs. Even scientists are not immune, subject to experimenter-expectation bias, or the tendency for observers to notice, select and publish data that agree with their expectations for the outcome of an experiment and to ignore, discard or disbelieve data that do not.

This dependency on belief and its host of psychological biases is why, in science, we have built-in self-correcting machinery. Strict double-blind controls are required, in which neither the subjects nor the experimenters know the conditions during data collection. Collaboration with colleagues is vital. Results are vetted at conferences and in peer-reviewed journals. Research is replicated in other laboratories. Disconfirming evidence and contradictory interpretations of data are included in the analysis. If you don’t seek data and arguments against your theory, someone else will, usually with great glee and in a public forum. This is why skepticism is a sine qua non of science, the only escape we have from the belief-dependent realism trap created by our believing brains. ...

TED: Michael Shermer: Skepticism Debunks Superstition (with Chinese subtitles)

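http://youtu.be/tIjgVk79rlg (inaccurate original title: "The Origin of Creativity Is Curiosity")

The speaker, Michael Shermer, is executive director of the Skeptics Society, which investigates claims of paranormal phenomena and pseudoscience. By applying doubt, it debunks a great deal of nonsense, much like a police fraud squad carrying out a sweep. In this talk, Michael gives many examples to show why a skeptical mind is so badly needed.

Alex's comment: The value of skepticism lies in its ability to expose superstition and pseudoscience. Exposing "intelligent design" or "miracles" is easy: just ask, "Can you explain that more clearly?" In fact, "intelligent design" and "miracles" explain nothing; their content is empty. The speaker also uses optical illusions to explain how superstitions form. The brain evolved to look for faces in any pattern, because recognizing who a face belongs to matters for survival. So we readily mistake random patterns for faces, from the Buddha and Guanyin to Jesus and the Virgin Mary. This is one of the causes of superstition.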

How Skeptics Can Break the Cycle of False Beliefs

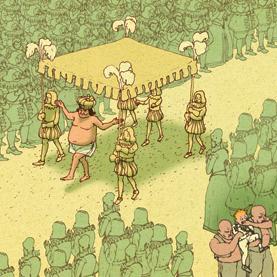

Pluralistic ignorance and the last best hope on Earth
By Michael Shermer, March 19, 2013, Scientific American
http://www.scientificamerican.com/article.cfm?id=how-skeptics-break-the-cycle-of-false-beliefs
http://www.michaelshermer.com/2013/03/dictators-and-diehards

The broader psychological principle at work here is “pluralistic ignorance,” in which individual members of a group do not believe something but mistakenly believe everyone else in the group believes it. When no one speaks up, it produces a “spiral of silence” that can lead to everything from binge drinking and hooking up to witch hunts and deadly ideologies.

When you add an element of punishment for those who challenge the norm, pluralistic ignorance can transmogrify into purges, pogroms and repressive political regimes. European witch hunts, like their Soviet counterparts centuries later, degenerated into preemptive accusations of guilt, lest one be thought guilty first. Fortunately, there is a way to break this spiral of ignorance: knowledge and communication. This is why totalitarian and theocratic regimes restrict speech, press, trade and travel and why the route to breaking the bonds of such repressive governments and ideologies is the spread of liberal democracy and open borders. This is why even here in the U.S., the land of the free, we must openly endorse the rights of gays and atheists to be treated equally under the law and why “coming out” helps to break the spiral of silence. Knowledge and communication, especially when generated by science and technology, offer our last best hope on earth.

Alex's comment: Salvation by science, knowledge, and communication. Contrary to Martin Luther's 16th-century assertion of "salvation by faith," it may be skepticism, the very opposite of faith, that saves humanity from deadly ideologies.

Why We Should Choose Science over Beliefs

By Michael Shermer, September 24, 2013, Scientific American
http://www.scientificamerican.com/article.cfm?id=why-we-should-choose-science-over-beliefs

Ideology needs to give way

"Like most people who hold strong ideological convictions, I find that, too often, my beliefs trump the scientific facts. This is called motivated reasoning, in which our brain reasons our way to supporting what we want to be true. Knowing about the existence of motivated reasoning, however, can help us overcome it when it is at odds with evidence."

"...all of us are subject to the psychological forces at play when it comes to choosing between facts and beliefs when they do not mesh. In the long run, it is better to understand the way the world really is rather than how we would like it to be."
